Partially Automated Assessment of Programming Assignments with Check50

CS50 is a set of command-line and online tools that support automated checking of code assignments. It works in combination with a cloud infrastructure developed and paid for by Harvard University, which uses it in its widely popular eponymous online classes. All this is mainly geared towards a fully automatic approach in order to deal with very large courses.

In 2020 the UoL moved to a new learning management system called Canvas, which made changes to teaching delivery necessary. I took this as an opportunity to trial CS50 in COMP122, our introductory object-oriented programming module, in an attempt to deal with an increase in student numbers and to reduce bias in marking.

Below I outline our assessment workflow, which ties together check50 and Canvas. I then discuss lessons learned on automated grading in general, followed by technical problems that are specific to CS50 and my solutions to them.

Our Assessment Workflow

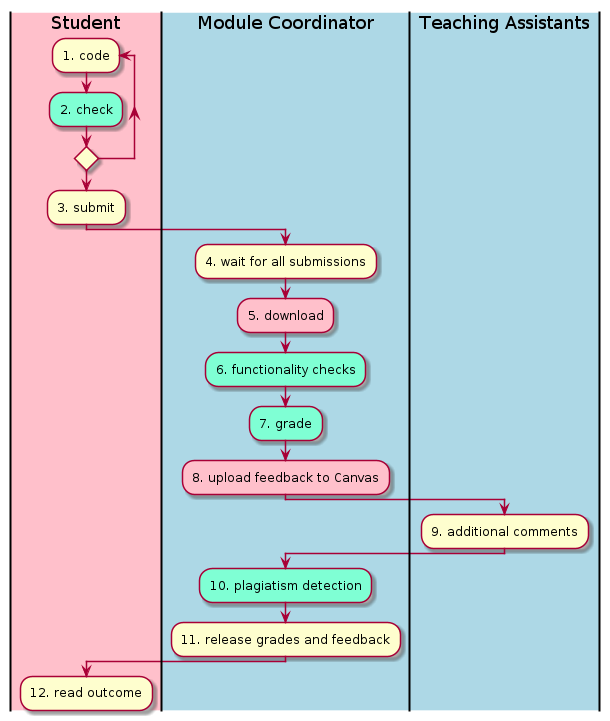

For various reasons we took a Goldilocks approach between full automation and the distributed manual marking done in previous years. Naturally, automating functionality checks speeds things up and reduces (variation in) bias, whereas allowing TAs to overrule results provides the necessary human oversight.

The combined workflow for programming assignments is shown in the figure. Actions in green are fully automated and fast. Actions in red can be automated but require some technical know-how for module-specific adaptations. Most time is spent at stages 4 and 9 due to individual deadline extensions and manual inspections.

After students submit their code, we run functionality checks and map the results to grades and feedback text. All this is done fully automatically. Only afterwards do TAs get to see the student code together with grades and suggested feedback text. They can overrule decisions if necessary and make additional comments to explain the outcomes.

In more detail, the steps are as follows.

1. Students write their programs.

2. Students may run automated checks on their attempt to get personalised feedback. They are encouraged to discuss this with TAs during lab times in case the feedback is not clear to them. Running the checks requires a GitHub account and the command-line tool check50, which comes pre-installed on the recommended online IDE. The checks available at this stage are a subset of those used for marking later on and are hosted on a public GitHub repository.

3. Students submit via submit50, a command-line tool that takes as an argument a “slug” that identifies the assignment. This requires the student to have a GitHub login and also to have signed up for the course on https://submit.cs50.io with the same account. The latter is necessary for teaching staff to get access to student submissions. Many students will already be on GitHub, and for those who are not before the term this is a valuable, authentic learning opportunity. We provide check50-based automarkers for programming labs as well, which prepares students for this unusual submission process before assignments.

4. We wait for all submissions. At this point we test and debug the full set of checks on the submissions already received and make adjustments.

5. We download submissions. Technically, what happens when students submit is that they push their code into a private GitHub repository (into a branch named after the slug) to which teachers have access. The module coordinator (MC) downloads a CSV file of all submissions for a given slug from https://submit.cs50.io. This lists (GitHub) username, timestamp and (git) commit hash, so that getting one submission amounts to (git) cloning a specific URL. As these are not publicly listed, this requires that

   - the student has given us access to these submissions, which is done by “joining the course” as mentioned in step 3, and

   - the MC authenticates themself using a (GitHub) API token.

   I have written a script to clone student submissions into separate directories.

6. check50 is run on all student submissions. This creates a report.json file for each submission that can be read programmatically. I wrote some scripts to run check50 and other tools in bulk and use check_all.sh to run the automarker. For safety reasons I execute this inside a sandbox (I use cli50, a Docker image plus setup script provided by CS50).

7. I use grade50 to automatically turn the report file into grades and feedback comments. This is based on a marking scheme that sets out how many points each passing check is worth. It also allows you to group checks into “criteria” and to pre-format comment strings for when a check fails or passes (see the README on my related GitHub project for an example). Grade50 was written by me, since the official CS50 tool suite does not have a grading tool to turn check results into marks. Just like all CS50 tools, it is written in Python and can be installed via pip from the Python Package Index. I use the grade_all.sh script to run the tool on all submissions. For each one, this produces a grades.json file.

8. I then upload student submissions and grades to Canvas. This requires a mapping of Canvas and GitHub usernames for all students as well as another (Canvas) API token for authentication. In fact, what I do is make a dummy submission in the name of each student (the Canvas assignment type is turned from “no submission” to “text submission” first). It lists the student’s GitHub account, a link to the submission, and the timestamp (Canvas uses this to calculate late penalties etc.). The data is read from the CSV file downloaded from the course page on https://submit.cs50.io in step 5, and from a local mapping of Canvas and GitHub usernames. I use the canvas_upload_submission.py script to make dummy submissions and canvas_grade_submissions.py to add grading info that includes a grade and textual feedback for several (Canvas) “rubric criteria”, which were created manually for the assignment on Canvas beforehand. In my assignments there was usually one rubric criterion for each source-code file the students were supposed to hand in.

9. TAs inspect and comment on each student’s submission via Canvas’s “SpeedGrader” marking tool. I find it most effective to get all TAs into a room (a Teams meeting this year) to get this done quickly together instead of waiting another week or more for TAs to do it in their own time.

10. I use compare50 for plagiarism checks. This comes with CS50 and is extremely easy and quick: it does a 1:1 comparison of every pair of student submissions (subdirectories) in a given directory and produces a fancy HTML/JS page for each, listing the degree of similarity.

11. Sending out results to students is now only a matter of making the grades visible in the Canvas gradebook.

12. Students get to see the outcomes directly on their Canvas “grades” pages.
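The download step above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the script used for COMP122: the CSV column names (`github_username`, `timestamp`, `commit_hash`), the repository URL pattern, the organisation name and the sample rows are all hypothetical, and the real export may look different. The function only builds the git commands and does not run them or handle authentication.

```python
import csv
import io

# Hypothetical URL pattern for the private per-student repositories.
REPO_URL = "https://github.com/{org}/{user}.git"


def clone_commands(csv_text, slug, org="example-org", dest="submissions"):
    """Return, per student, the git commands that fetch their submitted commit."""
    plans = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        user = row["github_username"]  # assumed column name
        target = f"{dest}/{user}"      # one directory per student
        plans[user] = [
            # Submissions live on a branch named after the slug.
            ["git", "clone", "--branch", slug,
             REPO_URL.format(org=org, user=user), target],
            # Pin the working copy to the exact commit that was submitted.
            ["git", "-C", target, "checkout", row["commit_hash"]],
        ]
    return plans


# Invented sample data standing in for the downloaded CSV file.
SAMPLE = """github_username,timestamp,commit_hash
alice,2020-11-02T10:15:00Z,1a2b3c4
bob,2020-11-02T23:59:10Z,5d6e7f8
"""

plans = clone_commands(SAMPLE, slug="example/assignment1")
```

Each command list could then be handed to `subprocess.run(cmd, check=True)` once git has been configured with the API token.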

Lessons Learned on Automarking in general

LL1: Speeding up assessment means more time spent on support

A big benefit of partially automated marking is that a larger proportion of teaching staff’s time can go towards supporting rather than assessing students.

Giving TAs automatically generated feedback stubs allows them to focus exclusively on those parts of a submission where failed tests indicate issues that require a second look and perhaps further explanations.

In the past two years it took three weeks to grade, and students only barely got their feedback before the next deadlines. With the new workflow we have so far been able to reduce this to just over one week, and most of that time was spent waiting for late submissions. The actual marking sessions with TAs were noticeably less time-consuming as well, all with an extra 100 submissions under consideration.

LL2: Automating functionality checks reduces marker bias

One of the main benefits of automated functionality checks is that one can guarantee consistent test coverage, meaning what exactly the student code is checked against, as a baseline. This reduces the bias that is unavoidable when code is assessed manually by different people. This was nicely confirmed by several plagiarism cases that we found later, all of which received very similar (\(\pm 5\%\)) grades.

Having automated checks in place allows us to fix grading mistakes quickly and consistently. For example, in the marking of our first assignments we accidentally deducted points because our model solution was inconsistent with the latest assignment brief. Students convincingly argued their case on the public course forum and I was happy to admit my mistake and to fix the grading. But rather than fixing only the grades for those few who voiced concerns, we were able to quickly re-run the automarking scripts and retrospectively add points for all 86 affected students.

LL3: Expect checks to be buggy

As with any software artefact, bugs happen and checks are not always perfect from the start. I was lucky to have extremely competent and enthusiastic TAs on board, but students did not always appreciate it even when faulty checks were repaired quickly, and some complained that faulty checks should not have been released in the first place. However, assuming that checks are refined and re-used in future, this is not a long-term issue.

LL4: Exercises can allow creative solutions or fine-grained marking, not both

Creating good assignments is difficult even without automarking in mind but one needs to take extra care when designing or adapting exercises so that attempts can be automatically processed.

Often, assignments are written in a way that allows or indeed encourages different solutions. This is great for making students think for themselves and realise that most problems can be solved in different ways, each with pros and cons. It should be clear that when it comes to writing checks for the flexible approach, one cannot go much beyond basic input/output checks. This is fine in many cases, but it makes it possible to hard-code answers rather than computing them if the checks are known.

The other end of the spectrum would be to specify exactly how an exercise is to be solved so that students learn how to code towards non-negotiable specs/interfaces. In the OOP module I teach, many learning outcomes to be tested are necessarily of this form. For example, we ask students to implement a certain class hierarchy, or to write classes that implement some (Java) interface, inherit methods, or include private attributes. Such things can be checked but require the exercise to be very specific in what it asks for.

Both approaches have their appeal and ideally one would mix and match depending on the subject at hand. Some students will have an easier time working on flexible exercises and struggle with explicit specs, and vice versa. A combination will not favour one mode of thinking. It is also often much easier to write input/output checks, so including flexible exercise parts makes it easier to write more checks, which results in better feedback. The second type of exercise needs to be set very carefully, to avoid students missing out on points for later parts because of typos or small mistakes in earlier, prerequisite parts. For example, if a method has an unexpected signature, then functionality checks cannot run. However, the “inflexible” sort of exercise allows much finer grading because we can test and award points for specific aspects of the specified design.

Either way, assignments need to be stated precisely enough for students to know what is needed. I would often include a description of a method’s signature, then this same signature as a line of code, followed by a description of the required functionality and an example. Early public checks (see below) give students the opportunity to test their understanding of the exercise and discuss it on a forum for clarification.

LL5: Early feedback is good for everyone!

Having automated checks in place means that these can be shared with students early on. We publish checks for basic assignment parameters, for example

- has a file called XYZ been submitted?

- does XYZ compile without errors?

- does class X have a method with signature Y?

as well as a few checks for input/output pairs from the assignment sheet. This way students can already get some personalised feedback before they submit. This allows them to fix silly but costly mistakes of not following the spec precisely for parts of the assignment that ask for a specific design. This led to a noticeably reduced number of submissions that lost out due to non-compiling code, typos in file names, etc.
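To give a flavour of a check for “does class X have a method with signature Y?”, here is a deliberately naive sketch that searches the Java source text with a regular expression. It is only an approximation for illustration: the function name, the `Calculator` example and the pattern are assumptions of mine, it can be fooled by comments or string literals, and it is not what our checks do (those compile the code and inspect it more thoroughly).

```python
import re


def declares_method(java_source, return_type, name, param_types):
    """Roughly test whether the source text declares a method with this signature."""
    # Each parameter is "<type> <identifier>", separated by commas.
    params = r"\s*,\s*".join(re.escape(t) + r"\s+\w+" for t in param_types)
    pattern = (r"\b" + re.escape(return_type) + r"\s+" + re.escape(name)
               + r"\s*\(\s*" + params + r"\s*\)")
    return re.search(pattern, java_source) is not None


# Invented student submission used only for this example.
SUBMISSION = """
public class Calculator {
    public int add(int a, int b) { return a + b; }
}
"""

ok = declares_method(SUBMISSION, "int", "add", ["int", "int"])
wrong_arity = declares_method(SUBMISSION, "int", "add", ["int"])
wrong_name = declares_method(SUBMISSION, "int", "sum", ["int", "int"])
```

Here `ok` is true, while the two deliberately wrong signatures are rejected, which is exactly the kind of typo-level mistake an early public check can surface.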

A welcome side effect is that students can now report bugs or point out inconsistencies in our checks before the deadline. This helps us to minimise technical errors on the part of the setter and increases shared understanding of the assignment requirements.

Seeing that their code passes the public checks gives students confidence in their attempt before submitting. Most students seem to make use of, and appreciate, early checks: 76 out of 100 participants in a recent mid-term survey either agreed or strongly agreed that “having automated feedback is helpful rather than distracting”, whereas only 10 disagreed. The quotes below are from our final module survey.

- “I like the automarking system. Especially the check50 check because it allows for me to estimate how well i’ve done before submitting”

- “Using the CS50 IDE and submit50 was very useful and much less stressful than manually having to submit the files.”

- “Submission and check system by far the best used for any module”

- “The checking system was clever, and was a good source of instant feedback.”

Challenges specific to CS50

Of course one can write module-specific automarkers using shell scripts, unit tests etc., but those are often fragile and difficult to maintain, and therefore not very useful in the long term once another module coordinator takes over. Ideally, one would use a standardised testing framework across different modules (and programming languages) to give students a uniform way to interact with the assessment process throughout their studies. Below I comment on the suitability of the CS50 tools for this and point out specific technical issues.

C1: Writing checks.

Check50 offers an easy and standardised way to write checks for any programming language, with dependencies and all. It is really well documented, and input/output checks in particular are trivial. Check50 allows you to write checks that control a shell on an (Ubuntu Linux) sandbox image. This way you can call compilers, interpreters and student code safely. Consider the following example, taken from the CS50 API docs.

```python
import check50  # import the check50 module


@check50.check()  # tag the function below as check50 check
def exists():  # the name of the check
    """description"""  # this is what you will see when running check50
    check50.exists("hello.py")  # the actual check


@check50.check(exists)  # only run this check if the exists check has passed
def prints_hello():
    """prints "hello, world\\n" """
    check50.run("python3 hello.py").stdout("[Hh]ello, world!?\n", regex=True).exit(0)
```

Similarly, one can easily check whether an existing file compiles, and if so whether the resulting class can be instantiated, and then whether it can be executed/called etc. Still, getting the hang of how to write checks takes some time. Writing checks for Java in particular is tricky once you go beyond I/O tests, and we ended up writing some really tricky reflection/introspection code.

I ended up writing a few add-ons to make it easier to automate Java-specific tasks, speed up checks and avoid unnecessary traffic. These add-ons are distributed on the Python Package Index (PyPI), which allows them to be declared as dependencies for checks and labs and pulled in automatically when running checks. The sources are available here:

- check50_java to run compiler and interpreter and set CLASSPATHs.

- check50_junit to run JUnit5 unit tests and interpret its reports.

- check50_checkstyle to run the static code analyser checkstyle and interpret its results.

While it is great that the check50 infrastructure is flexible enough so that one can integrate such specialised tools, it would be much more convenient if standard unit testing frameworks were directly supported via imports.

C2: GitHub lock-in

Although one can run checks from a local directory on code in another local directory, check50 is really designed to read the checks (problem sets) from a public GitHub repository. If you want students to run checks online (which transparently happens on AWS servers hosting an Ubuntu image, paid for by Harvard), they will require a GitHub account. The same is true if you want to take submissions via submit50, as we did for COMP122 this year.

C3: Grading

Check50 is great for defining and running checks, but it can only tell you which checks pass or fail. There is no way to declare how much a passed check is worth in terms of marks. This means that one needs to find another way to map checks to marks. I have written the tool grade50 for that. It can be run locally and takes a check50-produced report file and a (YAML) marking scheme to produce grades and feedback texts.
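The idea of mapping check results to marks can be sketched as follows. This is an illustration only and does not reproduce grade50 or its YAML format: the marking scheme is a plain Python dictionary, the report is assumed to be JSON with a `results` list whose entries carry a `name` and a `passed` field, and all check names, points and comments are invented.

```python
import json

# Hypothetical marking scheme: criterion -> (check name, points, comment if not passed).
SCHEME = {
    "Hello.java": [
        ("exists", 1, "Hello.java was not submitted."),
        ("compiles", 2, "Hello.java does not compile."),
        ("prints_hello", 2, "The output does not match the brief."),
    ],
}


def grade(report_json, scheme):
    """Turn a JSON check report into points and feedback per criterion."""
    results = json.loads(report_json)["results"]
    # Only an explicit True counts; failed (False) and skipped (None) checks do not.
    passed = {r["name"] for r in results if r["passed"] is True}
    grades = {}
    for criterion, checks in scheme.items():
        grades[criterion] = {
            "points": sum(pts for name, pts, _ in checks if name in passed),
            "out_of": sum(pts for _, pts, _ in checks),
            "comments": [msg for name, _, msg in checks if name not in passed],
        }
    return grades


# Invented report standing in for a check50-produced file.
REPORT = json.dumps({"results": [
    {"name": "exists", "passed": True},
    {"name": "compiles", "passed": True},
    {"name": "prints_hello", "passed": False},
]})

grades = grade(REPORT, SCHEME)
```

Grouping checks by criterion mirrors the one-rubric-criterion-per-file setup used for our assignments, so each criterion's points and comments can be uploaded separately.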

C4: No hidden checks or multiple dependencies

You cannot easily hide some private checks from the students and benefit from running checks on public cloud servers at the same time. To keep some checks for yourself for later marking, it is necessary to store (and develop) them somewhere else and ultimately run the final checks on a private machine. I ended up running checks on my laptop, which took about 3-4h for all students. This can be problematic when you need to re-run checks after fixing bugs.

One reason why it’d be helpful to hide checks in check50 is that a check can depend on at most one other check. This sounds like a minor issue, but several times I found myself needing multiple dependencies. For example, you may want to run the functionality check for a method only if the student code contains this method (i.e. it has the correct argument and return types) and their class can also be instantiated (i.e. they include a constructor with the right signature). One way to get around this problem is to introduce “dummy” checks that simply repeat both dependency checks. It’d be good to be able to hide those from students.
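The “dummy check” workaround can be modelled without check50 itself. The sketch below is a plain-Python stand-in, not check50’s API: the tiny runner, the check names and the string-matching “checks” on a `Square` class are all hypothetical and only illustrate how a single combined prerequisite lets a downstream check effectively depend on two others.

```python
def run_checks(checks, source):
    """Run checks in order; each check may name at most one dependency.

    `checks` maps a name to (dependency name or None, function).
    A check is skipped (recorded as None) unless its dependency passed.
    """
    outcome = {}
    for name, (dependency, func) in checks.items():
        if dependency is not None and outcome.get(dependency) is not True:
            outcome[name] = None  # skipped
        else:
            outcome[name] = bool(func(source))
    return outcome


# Deliberately naive stand-ins for real checks on Java source text.
def has_method(src):
    return "int area()" in src


def has_constructor(src):
    return "Square(int side)" in src


def prerequisites(src):
    # The "dummy" check: simply repeats both prerequisite checks.
    return has_method(src) and has_constructor(src)


def area_is_correct(src):
    return "side * side" in src  # placeholder for a functionality check


CHECKS = {
    "has_method": (None, has_method),
    "has_constructor": (None, has_constructor),
    "prerequisites": (None, prerequisites),
    "area_is_correct": ("prerequisites", area_is_correct),
}

# A submission that has the method but lacks the expected constructor.
no_constructor = "class Square { int area() { return side * side; } }"
outcome = run_checks(CHECKS, no_constructor)
```

The functionality check is skipped rather than failed here, but the extra `prerequisites` entry also shows up in the results, which is why hiding such checks from students would be useful.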

C5: Releasing feedback

It is possible to annotate student code with inline comments on GitHub. However, for this to work TAs have to be registered as teachers on the online version of the course on https://submit.cs50.io. It is not possible to hide these comments from the students and only release them once the marking/grading is complete.

C6: Canvas integration

Check50 and its tools are not developed with third-party learning and teaching platforms in mind, and there is no easy way to integrate them with Canvas. Luckily, Canvas can be scripted using a (web) API, but this requires some technical know-how to set up and possibly adjust scripts. My scripts can be found on GitHub but they are by no means robust. Naturally, it is necessary to get a mapping between students’ Canvas IDs, University IDs and GitHub accounts. The first two are unproblematic and accessible through Canvas, but matching GitHub accounts and Canvas IDs requires that the students themselves provide that info in the first place. I set up a “join the course” dummy assignment in which students were supposed to hand in the URL of their GitHub profile, but surprisingly many struggled with that initially. This is just another hurdle to overcome until the course runs smoothly.
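Building the Canvas-to-GitHub mapping from what students hand in might look like the sketch below. It is a minimal sketch under assumptions: the Canvas IDs and answers are invented, the function names are hypothetical, and it does not talk to the Canvas API at all. Answers that are not recognisable GitHub profile URLs are collected separately so that they can be followed up by hand.

```python
from urllib.parse import urlparse


def github_username(answer):
    """Extract a GitHub username from a handed-in profile URL, or return None."""
    text = answer.strip()
    if "://" not in text:
        text = "https://" + text  # tolerate answers like "github.com/alice"
    parsed = urlparse(text)
    host = parsed.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    parts = [p for p in parsed.path.split("/") if p]
    if host != "github.com" or not parts:
        return None
    return parts[0]


def build_mapping(answers):
    """Split answers into a Canvas ID -> GitHub username map and unresolved IDs."""
    mapping, unresolved = {}, []
    for canvas_id, answer in answers.items():
        user = github_username(answer)
        if user is None:
            unresolved.append(canvas_id)
        else:
            mapping[canvas_id] = user
    return mapping, unresolved


# Invented answers to the "join the course" dummy assignment.
ANSWERS = {
    "1001": "https://github.com/alice",
    "1002": "github.com/bob/",
    "1003": "https://www.github.com/carol?tab=repositories",
    "1004": "my username is dave",
}

mapping, unresolved = build_mapping(ANSWERS)
```

Keeping the unresolved IDs explicit makes it easy to contact exactly those students before the first assignment deadline.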

Conclusion

After piloting CS50 in the 2020 iteration of COMP122, I believe that partially automated marking is a massive help and a step forward from distributed manual marking. It is arguably much fairer than manual grading, and the results of the end-of-module survey show that this is reflected in students’ perception of the process: 71% of the 112 participants agreed or strongly agreed with the statement that “The marking was unbiased”.

The trial shows that automated marking using the CS50 tools can be made to work well if the module coordinator is able and willing to get their hands dirty. However, there were significant parts of the whole process that needed to be implemented, especially the grading and synchronisation with Canvas. Is it realistic to ask the majority of UG modules to switch to CS50 and Canvas? Probably not.

Let me finish on the point that this is possibly not the end of our automarking story. Together with Rahul Savani, I have simultaneously been evaluating the commercial alternative CodeGrade, which promises better integration with Canvas and 24/7 support for teaching staff, and we plan to run an extended pilot in 2021/22. We are grateful for financial support from UoL’s Digital Everywhere and Faculty Education Enhancement group to implement these improvements.